A hash table is a data structure used to search for exact matches to a search key. For searching, it works like this:

- Take a search key (example: the word “cat”)

- Hash the search key: pass the key to a function that returns an integer value (example: the hash function returns 47 when the input key is “cat”)

- Use the integer value to inspect a slot to see if it contains the search key (example: look in an array at element 47)

- If the key matches, great: you’ve found what you’re looking for and can retrieve whatever metadata is stored with it (example: in array element 47, we’ve found the word “cat”. We retrieve metadata that tells us “cat” is an “animal”)

- If the key doesn’t match and the slot contains something besides the key, carry out a secondary search process to make sure the search key really isn’t stored in the hash table; the secondary search process varies depending on the type of hashing algorithm used (example: in array element 47, we found the word “dog”. So, let’s look in slot 48 and see if “cat” is there. Slot 48 is empty, so “cat” isn’t in the hash table. This is called linear probing, and it’s one kind of secondary search)
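The steps above can be sketched in C. This is a minimal sketch, not a production table: `struct slot`, `toy_hash`, `probe_insert`, and `probe_lookup` are hypothetical names, the byte-summing hash is a deliberately simple placeholder, and an empty slot is assumed to be marked by a NULL key.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* One slot holds a key and its metadata; key == NULL marks an empty slot. */
struct slot {
    const char *key;
    const char *meta;
};

/* Placeholder hash (an assumption): just sums the bytes of the key. */
static unsigned int toy_hash(const char *key)
{
    unsigned int h = 0;
    while (*key != '\0')
        h += (unsigned char)*key++;
    return h;
}

/* Store key in its home slot, or in the next free slot after it.
 * Returns 1 on success, 0 if every slot is taken. */
int probe_insert(struct slot *table, size_t nslots,
                 const char *key, const char *meta)
{
    assert(nslots > 0);
    size_t start = toy_hash(key) % nslots;
    for (size_t i = 0; i < nslots; i++) {
        struct slot *s = &table[(start + i) % nslots];
        if (s->key == NULL || strcmp(s->key, key) == 0) {
            s->key = key;
            s->meta = meta;
            return 1;
        }
    }
    return 0;
}

/* Returns the metadata stored with key, or NULL if key is absent. */
const char *probe_lookup(const struct slot *table, size_t nslots,
                         const char *key)
{
    assert(nslots > 0);
    size_t start = toy_hash(key) % nslots;
    for (size_t i = 0; i < nslots; i++) {
        const struct slot *s = &table[(start + i) % nslots];
        if (s->key == NULL)
            return NULL;            /* empty slot: key is not stored */
        if (strcmp(s->key, key) == 0)
            return s->meta;         /* match, possibly after probing */
    }
    return NULL;                    /* every slot inspected, no match */
}
```

With this toy hash, “cat” and “act” land in the same home slot, so the second one inserted ends up one slot further along and is found by the secondary search.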

I had a minor obsession with hash tables for ten years.

The worst case is terrible

Engineers irrationally avoid hash tables because of the worst-case O(n) search time. In practice, that means they’re worried that everything they search for will hash to the same value; for example, imagine hashing every word in the English dictionary, and the hash function always returning 47. Put differently, if the hash function doesn’t do a good job of randomly distributing search keys throughout the hash table, the searching will become scanning instead.

This doesn’t happen in practice. At least, it won’t happen if you make a reasonable attempt to design a hash function using some knowledge of the search keys. Indeed, in the average case, hashing is usually much closer to O(1). In practice, that means you’ll almost always find what you’re looking for on the first search attempt (and you will do very little secondary searching).

Hash tables become full, and bad things happen

The story goes like this: you’ve created a hash table with one million slots. Let’s say it’s an array. What happens when you try and insert the 1,000,001st item? Bad things: your hash function points you to a slot, it’s full, so you look in another slot, it’s full, and this never ends — you’re stuck in an infinite loop. The function never returns, CPU goes through the roof, your server stops responding, and you take down the whole of _LARGE_INTERNET_COMPANY.

This shouldn’t happen if you’ve spent time on design.

Here’s one solve: there’s a class of hash tables that deal with becoming full. They work like this: when the table becomes x% full, you create a new hash table that is (say) double the size, and move all the data into the new hash table by rehashing all of the elements that are stored in it. The downside is you have to rehash all the values, which is an O(n) [aka linear, scanning, time consuming] process.
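Here’s a rough sketch of that grow-and-rehash idea in C. The names (`struct table`, `table_insert`, `grow`) are hypothetical, the 50% threshold stands in for “x% full”, the hash is a simple placeholder, and keys are assumed not to be inserted twice.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

struct table {
    const char **slots;   /* NULL marks an empty slot */
    size_t nslots;
    size_t used;
};

/* Placeholder hash (an assumption), multiply-and-add over the bytes. */
static size_t toy_hash(const char *key)
{
    size_t h = 0;
    while (*key != '\0')
        h = h * 31 + (unsigned char)*key++;
    return h;
}

/* Put key in the first free slot at or after its home slot. */
static void place(const char **slots, size_t nslots, const char *key)
{
    size_t i = toy_hash(key) % nslots;
    while (slots[i] != NULL)
        i = (i + 1) % nslots;
    slots[i] = key;
}

/* Allocate a table twice the size and rehash every stored key: O(n). */
static int grow(struct table *t)
{
    size_t new_n = t->nslots * 2;
    const char **fresh = calloc(new_n, sizeof *fresh);
    if (fresh == NULL)
        return 0;
    for (size_t i = 0; i < t->nslots; i++)
        if (t->slots[i] != NULL)
            place(fresh, new_n, t->slots[i]);
    free(t->slots);
    t->slots = fresh;
    t->nslots = new_n;
    return 1;
}

int table_init(struct table *t, size_t nslots)
{
    assert(nslots > 0);
    t->slots = calloc(nslots, sizeof *t->slots);
    t->nslots = nslots;
    t->used = 0;
    return t->slots != NULL;
}

/* Grow first if this insert would push the table past 50% full. */
int table_insert(struct table *t, const char *key)
{
    if ((t->used + 1) * 2 > t->nslots && !grow(t))
        return 0;
    place(t->slots, t->nslots, key);
    t->used++;
    return 1;
}

int table_contains(const struct table *t, const char *key)
{
    size_t i = toy_hash(key) % t->nslots;
    while (t->slots[i] != NULL) {
        if (strcmp(t->slots[i], key) == 0)
            return 1;
        i = (i + 1) % t->nslots;
    }
    return 0;
}
```

Because the table never goes past half full, there is always an empty slot to stop a probe, so the infinite loop from the story can’t happen. The price is the occasional O(n) call to `grow`.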

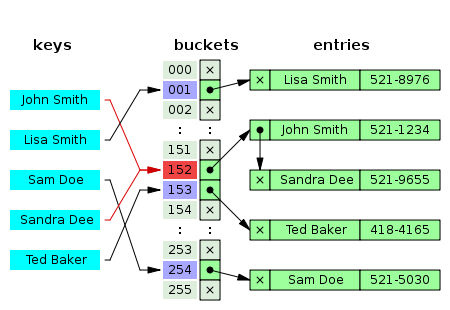

Here’s another solve: instead of storing the metadata in the array, make your array elements pointers to a linked list of nodes that contain the metadata. That way, your hash table can never get full. You hash to a slot, and then traverse the linked list at that slot looking for a match; traversing the linked list is your secondary search process. Here’s a diagram that shows a so-called chained hash table:

A chained hash table, showing “John Smith” and “Sandra Dee” both in slot 152 of the hash table. You can see that they’re chained together in a linked list. Taken from Wikipedia, http://en.wikipedia.org/wiki/Hash_table

Chained hash tables are a very good idea. Problem solved.
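A chained table along those lines might look like the following in C. Treat it as a sketch under assumptions: `struct chained`, `chained_insert`, and `chained_lookup` are made-up names, the hash is a placeholder, and new nodes are simply pushed onto the front of their chain without checking for duplicates.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

struct node {
    const char *key;
    const char *meta;
    struct node *next;    /* the single pointer per node */
};

struct chained {
    struct node **heads;  /* array of chain heads, NULL when empty */
    size_t nslots;
};

/* Placeholder hash (an assumption), multiply-and-add over the bytes. */
static size_t toy_hash(const char *key)
{
    size_t h = 0;
    while (*key != '\0')
        h = h * 31 + (unsigned char)*key++;
    return h;
}

int chained_init(struct chained *t, size_t nslots)
{
    assert(nslots > 0);
    t->heads = calloc(nslots, sizeof *t->heads);
    t->nslots = nslots;
    return t->heads != NULL;
}

/* Push a new node onto the front of the chain for key's slot. */
int chained_insert(struct chained *t, const char *key, const char *meta)
{
    struct node *n = malloc(sizeof *n);
    if (n == NULL)
        return 0;
    size_t i = toy_hash(key) % t->nslots;
    n->key = key;
    n->meta = meta;
    n->next = t->heads[i];
    t->heads[i] = n;
    return 1;
}

/* Hash to a slot, then walk that slot's chain looking for key. */
const char *chained_lookup(const struct chained *t, const char *key)
{
    size_t i = toy_hash(key) % t->nslots;
    for (const struct node *n = t->heads[i]; n != NULL; n = n->next)
        if (strcmp(n->key, key) == 0)
            return n->meta;
    return NULL;
}
```

Even a two-slot table built this way accepts any number of keys; the chains just get longer, which is why the sizing advice that follows still matters.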

By the way, I recommend you create a hash table that’s about twice the size of the number of elements you expect to put into the table. So, if you’re planning on putting one million items in the table, go ahead and create a table with two million slots.

Trees are better

In general, trees aren’t faster for searching for exact matches. They’re slower, and here’s peer-reviewed evidence that compares B-trees, splay trees, and hashing for a variety of string types. So, why use a tree at all? Trees are good for inexact matches — say finding all names that begin with “B” — and that’s a task that hash tables can’t do.

Hash functions are slow

I’ve just pointed you to research that shows that must not be true. But there is something to watch out for: don’t use the traditional modulo-based hash function you’ll find in your algorithms textbook. For example, here’s Sedgewick’s version; note the “% M” modulo operation that’s performed once per character in the input string. The modulo is used to ensure that the hash value falls within the size of the hash table: for example, if we have 100 slots and the hash function returns 147, the modulo turns that into 47. Instead, do the modulo once, just before you return from the hash function.
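To make the difference concrete, here’s a small sketch of the two placements of the modulo. It is not Sedgewick’s listing: the function names and the multiplier 127 are assumptions chosen for illustration.

```c
#include <assert.h>

/* Textbook style: one modulo per character keeps h below m at every step. */
unsigned int hash_mod_each(const char *s, unsigned int m)
{
    assert(m > 0);
    unsigned int h = 0;
    for (; *s != '\0'; s++)
        h = (127u * h + (unsigned char)*s) % m;
    return h;
}

/* Let h wrap around (unsigned overflow is well defined in C) and
 * reduce it to the table size once, just before returning. */
unsigned int hash_mod_once(const char *s, unsigned int m)
{
    assert(m > 0);
    unsigned int h = 0;
    for (; *s != '\0'; s++)
        h = 127u * h + (unsigned char)*s;
    return h % m;
}
```

For short keys the two return the same slot. For longer keys the second version wraps around and the values can differ, but both stay within the table, and the second does one division per key instead of one per character.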

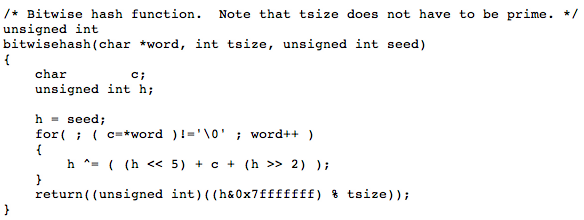

Here’s the hash function I used in much of my research on hashing. You can download the code here.

A very fast hash function written in C. This uses bit shifts, and a single modulo at the end of the function. If you find a faster one, I’d love to hear about it.
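The listing itself isn’t reproduced in the text here, so what follows is only a sketch of a hash in the style the caption describes: bit shifts in the loop and a single modulo at the end. The name `shift_xor_hash`, the `seed` parameter, and the shift amounts 5 and 2 are assumptions; the final mask and modulo follow the return statement quoted in the comments further down.

```c
#include <assert.h>

/* Mixes each character into h with shifts and an exclusive-or,
 * then reduces h to a slot number once at the end. */
unsigned int shift_xor_hash(const char *key, unsigned int tsize,
                            unsigned int seed)
{
    assert(tsize > 0);
    unsigned int h = seed;
    for (; *key != '\0'; key++)
        h ^= (h << 5) + (h >> 2) + (unsigned char)*key;
    return (h & 0x7fffffff) % tsize;   /* single modulo */
}
```

The loop body has no division in it at all, which is where the speed is meant to come from.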

Hash tables use too much memory

Again, not true. The same peer-reviewed evidence shows that a hash table uses about the same memory as an efficiently implemented tree structure. Remember that if you’re creating an array for a chained hash table, you’re just creating an array of pointers — you don’t really start using significant space until you’re inserting nodes into the linked lists that are chained from the hash table (and those nodes typically take less space than nodes in a tree, since they only have one pointer).

One Small Trick

If you want your hash tables to really fly for searching, move any node that matches your search to the front of the chained list that it’s in. Here’s more peer-reviewed evidence that shows this works great.
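Here’s a minimal sketch of that move-to-front step for a single chain. The `struct node` layout and the name `find_move_to_front` are assumptions; `head` is the address of the table slot that points at the chain.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

struct node {
    const char *key;
    const char *meta;
    struct node *next;
};

/* Search the chain at *head. On a hit, unlink the node and relink it
 * at the front so the next search for the same key finds it first. */
struct node *find_move_to_front(struct node **head, const char *key)
{
    assert(head != NULL);
    struct node *prev = NULL;
    for (struct node *n = *head; n != NULL; prev = n, n = n->next) {
        if (strcmp(n->key, key) == 0) {
            if (prev != NULL) {        /* not already at the front */
                prev->next = n->next;
                n->next = *head;
                *head = n;
            }
            return n;
        }
    }
    return NULL;
}
```

The trick costs three pointer assignments per hit, and it pays off when a few keys are searched for far more often than the rest.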

Alright. Viva Hash Tables! See you next time.

Hugh,

Thank you for the post! Love it! I am one of those engineers who is obsessed with Hash Tables.

Isn’t it true that every hash function has its pathological data set, and hence the use of universal hashing? I believe it’s not easy to come up with one best hash function that performs well with every possible data set in the universe.

Great post! How about a followup talking about one of my favorites — perfect hash functions, for the special case when you know all of your inputs?

The problem is that if you don’t resize your hash table (the O(N) operation), you can end up with O(N) searches if you greatly misjudge the initial size. If you create a hash table with 100 entries, and stick a million elements into it, the average slot is going to have 10,000 entries in its linked list, and a search takes O(10,000) time. In general, search takes O(N/k) time for a k-bucket hash table. The nice thing about trees is that they “fail safe”: a million element tree is only ~3x slower than a 100 element tree. The base speed is slower, but you don’t fall off a cliff if you screw up (or are the subject of an exploit).
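The arithmetic behind those two figures can be written out, assuming keys spread evenly over the buckets and a balanced tree whose search cost grows with the logarithm of its size:

```latex
\[
\text{average chain length} = \frac{N}{k} = \frac{10^{6}}{10^{2}} = 10^{4},
\qquad
\frac{\log_2 10^{6}}{\log_2 10^{2}} = \frac{6}{2} = 3 .
\]
```

The second ratio is the same in any logarithm base, which is where the “~3x” comes from.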

Argent Logophile: it’s easy to prove that all hash functions have pathological data sets, via the pigeonhole principle.

Is your proposed hash function really that good? Because Bob Jenkins’ fast hashes are significantly more complex; he considers key distributions in depth. http://burtleburtle.net/bob/hash/doobs.html https://en.wikipedia.org/wiki/Jenkins_hash_function

Does anyone actually believe any of those 5 myths? I often ask interview candidates—at a well-known Internet company—to implement a hashtable, and I’ve never encountered anyone who could implement a hashtable yet suffered from these misconceptions.

(1) The worst-case O(n) performance is only really relevant in adversarial situations, when an attacker is trying to deny service; it never happens by chance unless you’ve screwed up your hash functions.

(2) The use of linked lists for buckets is nearly universal, AFAICT.

(3) Hash functions may be slow for custom objects, but they can often be computed on first use and saved in an object field.

Also, it’s better not to do “mod M” in your hash function at all: the hashtable logic should be doing this, since it knows the current size of the table, and it may vary as resizes occur. Sedgewick’s style dates from a time when users would roll their own type-specific fixed-size (M) hashtable rather than use a generic library implementation.

(4) Trees have log(n) insert/update/delete time, so obviously they’re slower unless they’re very small. One uses a tree when you want an ordered set or map.

(5) Empty hash tables usually reserve some space (e.g. 10 words) so they do use more space than an empty tree, and since “most collections are empty” this can be a waste. I suspect that’s why C++ programmers still prefer std::map when they don’t expect it to get very big.

Java, Python and Go programmers use hashtables like there’s no tomorrow—far more than they use red/black tree alternatives. C++ users seem to use both about equally in my experience.

From which group did you hear these myths?
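The suggestion under (3) above, keeping “mod M” out of the hash function, can be sketched like this. The hash here is 32-bit FNV-1a, swapped in purely as a well-known example; `full_hash` and `slot_for` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Size-independent hash: 32-bit FNV-1a over the bytes of the key.
 * It knows nothing about the table it will be used with. */
uint32_t full_hash(const char *key)
{
    uint32_t h = 2166136261u;          /* FNV-1a offset basis */
    for (; *key != '\0'; key++) {
        h ^= (unsigned char)*key;
        h *= 16777619u;                /* FNV-1a prime */
    }
    return h;
}

/* The table, which knows its current size, does the reduction. */
size_t slot_for(uint32_t hash, size_t nslots)
{
    assert(nslots > 0);
    return hash % nslots;
}
```

Because `full_hash` doesn’t depend on the table size, its result can be computed once and stored with the key, then reduced again with `slot_for` after every resize.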

Not bad, an eye-opener, but the ease of using a Hashtable is more appealing than trees.

Just finished reading both the papers cited as evidence in the post. Really enjoyed reading the extensive treatment of burst tries! Thank you!

As has been pointed out, the issue arises when you have an adversarial input. Read the relevant BlackHat talks on computational complexity attacks. A lot of software systems are used in security-critical applications nowadays, and you can’t just have a controller in a 747 stall because of nasty input values. And yes, 747s also run off-the-shelf Unixes nowadays.

Normally, small and medium-size applications should not keep hash tables with millions of rows in their memory space. The design/architecture of these types of applications should include a database engine behind them in order to handle big tables. Under these conditions you won’t have the issues you are mentioning here with hash tables (and others) because the DB engine will do that for (and better than) you.

Could someone please illustrate an example of an application in a valid scenario that requires handling a big hash table by itself? But please, make sure that adding a database engine was not the best answer for your example.

mmmm… Jorge. The article is about hashtable myths, no? Not about DB engines vs hashtables.

Nevertheless, to answer your question… twenty-plus years ago I wrote a full text search for a CD product (a collection of articles). The text and index were fixed. The base target computer was a 386 with a 2X CD reader, running Windows 3.1. I used a large closed hashtable with two finely tuned hashing functions to minimize the chain length. I was able to achieve subsecond full text search on this ridiculously slow platform, generating O(n) I/O to the CD drive, where n signified the number of non-noise words in the phrase being searched. The second hash value let me avoid strcmp on search values, (vastly) far enough from the primary hash to let me measurably guarantee no primary+secondary hash collisions.

And Chris…

Mind you, the example hashing function above is just a starting point when developing like I did, with a fixed dataset. I experimented for days to reach an optimal chain length < 3 compared to table size.

In other words, you are allowed to stand on someone else's shoulders and improve on their work when you need to. I rarely need to tune hashing functions now since they're rarely a bottleneck. (Don't optimize the fast parts, ya know what I mean?)

return((unsigned int)((h&0x7fffffff) % tsize));

It concerns me when folks use return as if it were a function. No harm, no foul, tho.

When I have a hash conflict, I just put the item into the next free slot. So at that point future lookups become a linear search with small N — quite simple and effective. The key is to keep N small for your dataset.

I would note that there are ways to avoid worst-case search time, e.g.: cuckoo hashing and perfect hashing. These are not ideal, of course. A perfect hash function may not materialize (a small probability, but I have seen this in practice). And it can in fact be slower than regular hashing. Cuckoo hashing, AFAIK can also suffer from adversarial input, but only at the time of construction.

I agree about different hashing functions being essentially the same: computation of a hash function takes very little time. A random access to a memory cell takes long: hundreds of CPU cycles.

Final note: you can replace a hash with a trie. Runtime is comparable and I saw some tries being faster than hashes.

Great post indeed. As a Java developer, I see most people struggle to choose between HashMap and Hashtable, and that’s it.

Your research will force them to think more, including me.

Some of these “myths” are actually very true. What you say is true only for string keys, but what about hashing variable length int arrays? Then all your myths may become reality, and the sad thing is that it is usual to hash custom keys.

One of the good posts on hash tables. Your post really helps people understand when to use a hash table and when not to.

I’ve been a big fan of hash tables for more than 25 years.

However, recently (i.e., past ten years or so) I’ve been working on a project with big NUMA (non-uniform memory access) SMP (symmetric multiprocessor) machines. Like, 256 cores, 2 terabytes of RAM.

Here, VERY surprisingly, hash tables are MUCH slower than other techniques. This is because when you go out of cache, you can wind up accessing RAM on another board in the computer, and even though the interconnects are really fast, it’s still like 70 times (literally) slower to get there.

So hash tables, since by definition they jump all over the place, are often the *slowest* way to do something. We were doing some counting of unique values, and found that having each thread collect an array of values, and periodically sort and uniq the array was MUCH faster than using hash tables.
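The “sort and uniq” step might look roughly like this in C. It’s a single-threaded sketch with a hypothetical name, `sort_uniq`; collecting values per thread and merging the per-thread results are left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

static int cmp_ull(const void *a, const void *b)
{
    unsigned long long x = *(const unsigned long long *)a;
    unsigned long long y = *(const unsigned long long *)b;
    return (x > y) - (x < y);
}

/* Sorts values in place, squeezes out duplicates, and returns the
 * number of distinct values left at the front of the array. */
size_t sort_uniq(unsigned long long *values, size_t n)
{
    assert(values != NULL || n == 0);
    if (n == 0)
        return 0;
    qsort(values, n, sizeof *values, cmp_ull);
    size_t out = 1;
    for (size_t i = 1; i < n; i++)
        if (values[i] != values[out - 1])
            values[out++] = values[i];
    return out;
}
```

After the sort, the duplicate-removal pass walks the array front to back, so memory is touched sequentially rather than at random.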

As always, benchmarks are your friends, and relying on dogma isn’t 🙂

I do hash arrays all the time in AWK:

LUN[WWN] = "something";

Order[WWN] = ++Count;

How can I ever run out of “slots”, as long as there is virtual memory available?

How can I ever “misplace” “something” in the wrong slot, if the assumption that the world wide number (WWN) is unique holds true?

Hi Chris, thanks for the comment (this post lives on!). You might want to read the papers in the links – they aren’t full of dogma, they are full of experiments / benchmarks. Hugh.

The trouble with hash tables is they require you to fiddle with them. You can tune the hash function. You can tune the table size or load factor. If your table is dynamic like C++ std::unordered_map, you can tune the maximum number of collisions before a rehash.

Tree-based maps are slower than hash tables, but they’re not that much slower, a factor of 3x in my personal testing (100k keys). Hash tables are held in such reverence that I assumed they were way faster, like 10x or more, but this isn’t the case.

Thanks for this interesting article. I want to provide a more complete answer to the question “So, why use a tree at all?” For one thing, to get the advantages of immutable, persistent data structures, e.g. the ability to backtrack, and lock-free concurrency. Persistent data structures are awesome!

Also, remember that only a small proportion of your code really contributes materially to the overall performance of your application. If you have profiled an app that is too slow and it turns out that you are spending a lot of the time doing tree lookups, then sure, if you don’t need a persistent data structure, consider using a hash table. But if using a hash table instead of a tree in a particular place is going to make your app 0.000001% faster, then who cares? Any other consideration is more important.

Also, some applications need lots of small dictionaries which very often contain 0, 1, or 2 entries. For example, a compiler might keep a map of a function’s arguments by name, or a map of a function’s type parameters by name. Immutable trees perform fantastically in these cases. For example, an EmptyMap class’s lookup function might be “return nil”, and a Map1 (Map containing one entry) class’s might be “return keyToFind == key ? value : nil”. Those beat the snot out of a hash lookup! In addition, such trees use very little memory.

And finally, trees can be ordered, allowing you to iterate through the entries in a desired order without having to sort them.

So trees do have their uses!

Hello,

I am a retired software engineer. The last 15 years of my career were spent as a Sr. Fellow for two of the top telecommunications firms in the USA. I developed a lateral hashing system for call record processing (hash to first cell, linked list for collisions). We were able to identify customer information for 80 million calls (at the time 1 day’s traffic) in 20 minutes. This would be very difficult (and perhaps even unlikely) using anything other than hashing. The algorithm was a variation on one presented in Aho’s Dragon book. All done in ‘C’ on an array of HP 9000 computers.

This process was completed in 2002.

Larz60+ (python-forum.io)

Myth 6: They need a load factor (false).

Chained tables can be filled to 100%. Also, the linked list nodes do not need to be allocated as separate objects. A single Node[] that has the same size as the Bucket[] suffices. This is super efficient. The .NET Dictionary class does this.

Hash tables are fast only when their hash function is fast. Hash operations are dependent on the hash function. Most hash functions are not fast (as in a single computation without a loop fast). The performance of hashing is not as good as people think. In general, assuming a good hash function, it is similar in performance to other techniques. In the ideal case of a truly fast hash function, it is better than other techniques and should be used. Memory use is similar to other data structures. Hashing is good, but sometimes overrated.

Are you aware of any decent hash functions that are fast?

Adding to what Joseph said, hash tables are a win when they can eliminate many equality checks between *unequal* entities. If there are not going to be enough of those != checks made, then the hash function is never going to pay for itself. This can easily be the case when there are only a few elements in the table — you are better off with a tree. However, we should point out that some language runtimes cache the hash value of an object, so repeated lookups with the same key values are fast.